Predicting Outcomes from DonorsChoose.org Applications

Python, Data Mining, Classification

A semester-long capstone project for the University's School of Information Master's degree program. Will be updated as benchmarks are reached throughout the semester.

Classifying Movie Genres from Summary Text

Python, Natural Language Processing, Machine Learning

Final project for SI 630 - Algorithms and Natural Language Processing. Will be updated as progress is made throughout the semester.

Analyzing/Predicting Severe Weather From Historical Data

Python, Data Mining

This project was a cumulative assessment of course content covered in SI 671 - Data Mining. Parameters for the project were fairly loose: we were asked to locate a data source we considered interesting and apply a variety of analyses to create a predictive method. Evaluation was based on the quality of the analysis, as well as a typed final report covering a project overview, methods, evaluation, and conclusions.

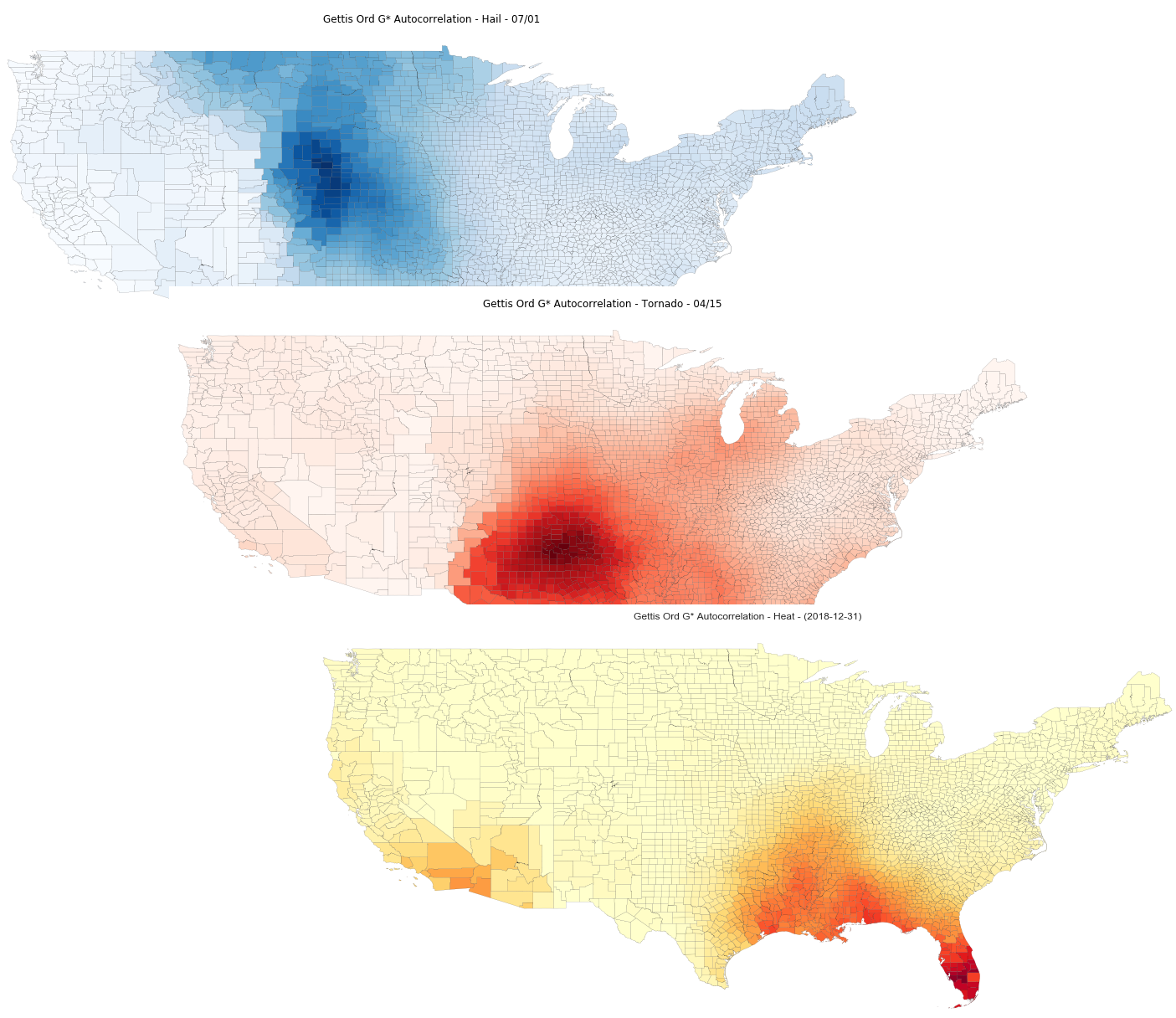

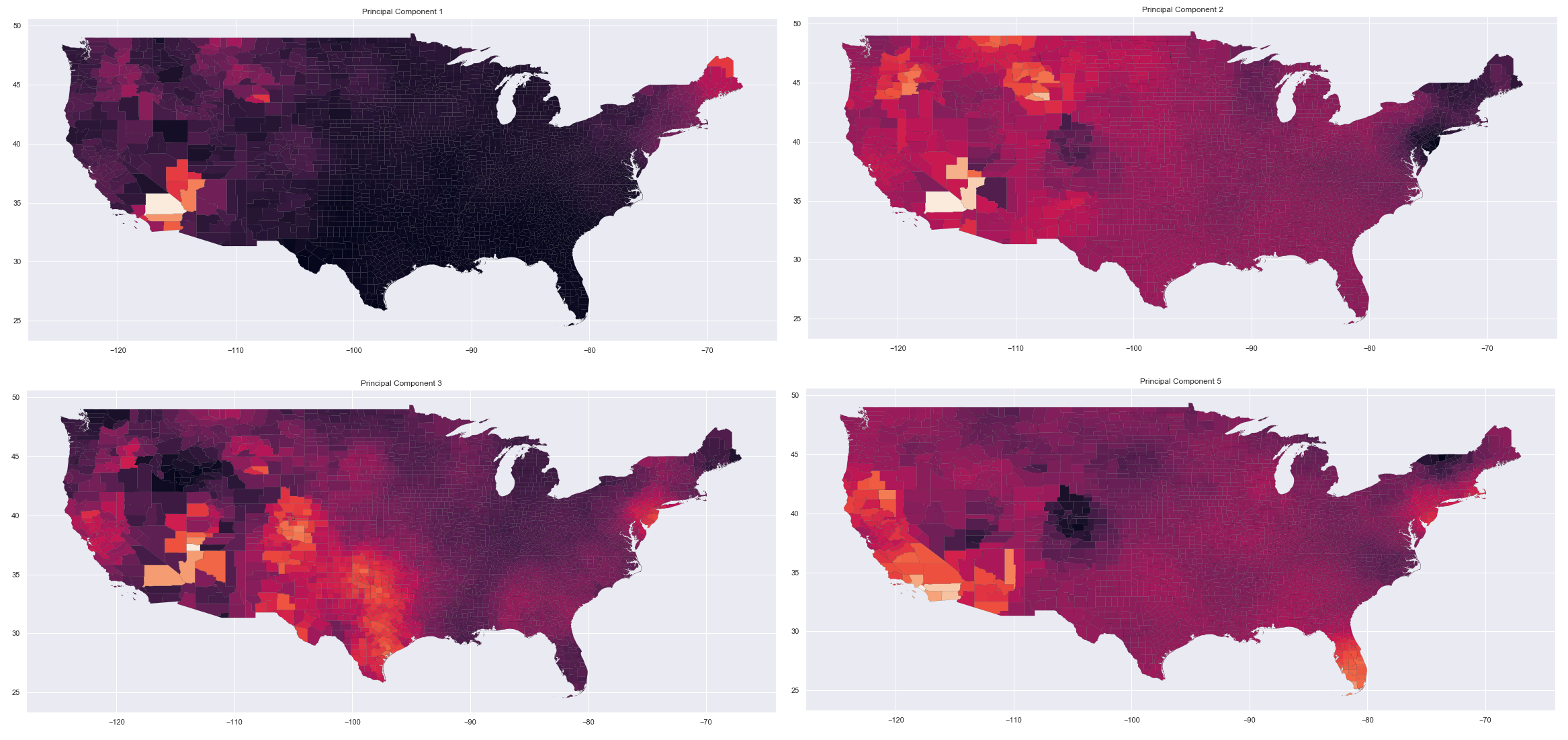

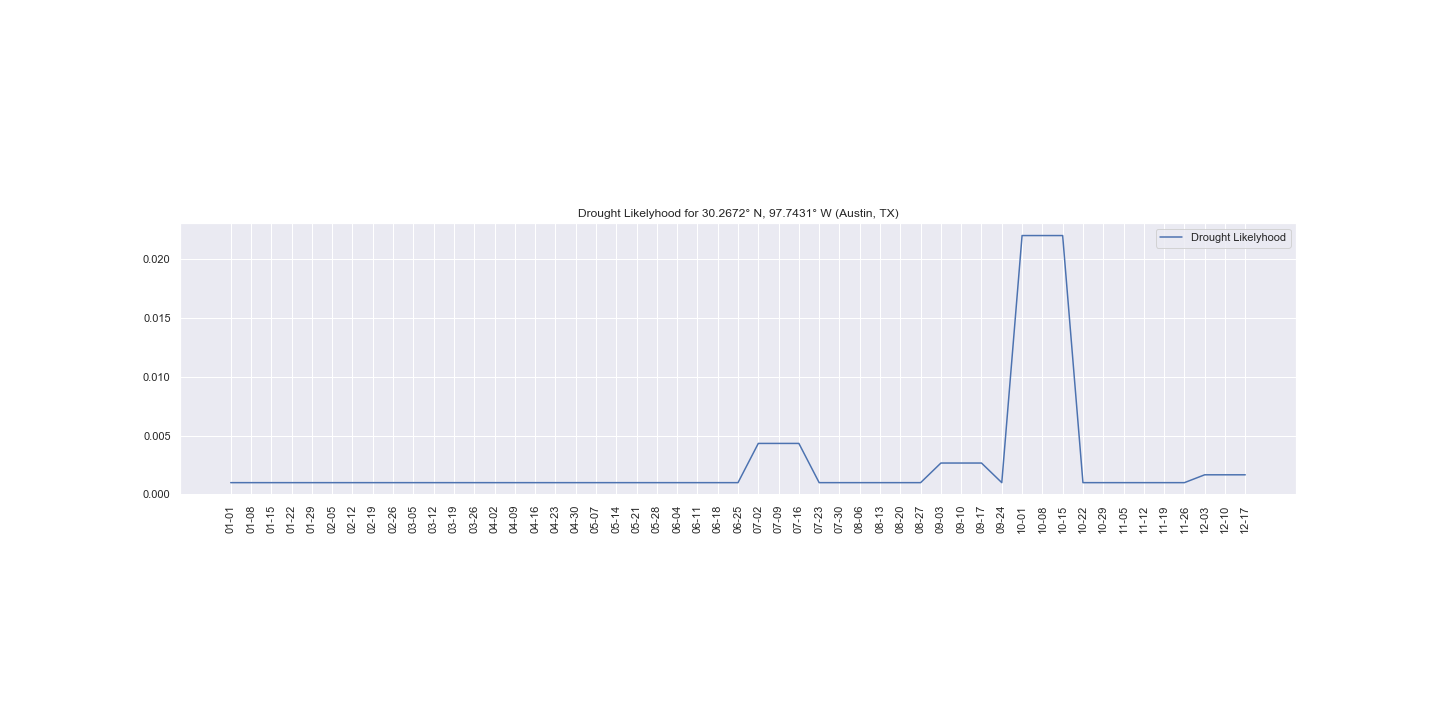

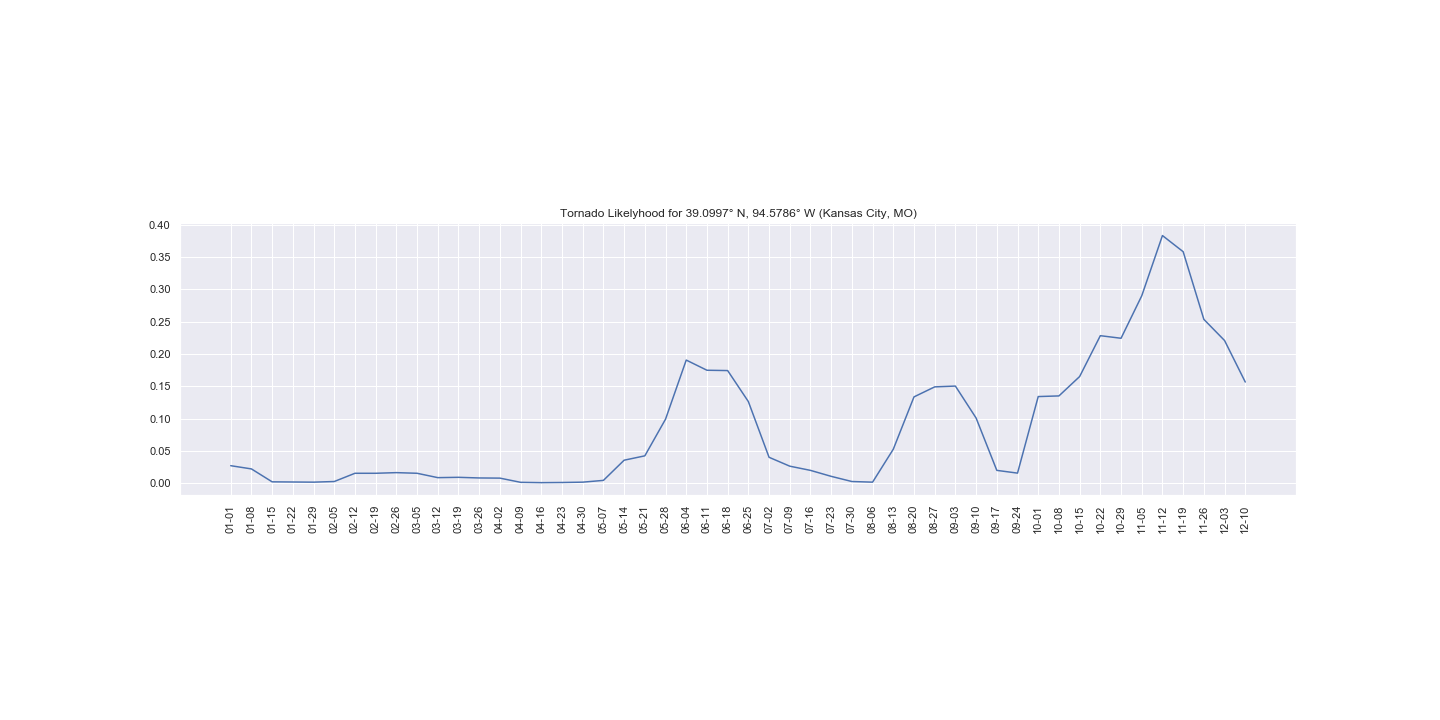

My project focused on NOAA's Severe Weather Data Inventory, a collection of nearly every severe weather event affecting the United States from 1950 to the present day, which includes a wide variety of geospatial and temporal information. My analysis created sub-selections of the data by event type and date and collected the resulting events into a matrix representation of the continental United States. After aggregating that data by county, I applied a Getis-Ord G autocorrelation statistic to locate significant spatial patterns. A detailed account of the methodology and results can be found in the Final Report PDF linked below.
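As a rough illustration of the hot-spot step, here is a minimal sketch of the local Getis-Ord Gi* z-score. It assumes binary adjacency weights and treats each region as its own neighbor; the function name, the `neighbors` mapping, and the choice of the local Gi* variant are assumptions for illustration and are not taken from the final report.

```python
import math

def getis_ord_gi_star(values, neighbors):
    """Return a Gi* z-score for each region.

    values:    list of event counts, one per region (hypothetical data).
    neighbors: dict mapping a region index to a list of adjacent indices.
    Binary weights are assumed, and each region is included in its own window.
    """
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation, as used in the Gi* definition.
    s = math.sqrt(sum(v * v for v in values) / n - mean ** 2)
    scores = []
    for i in range(n):
        window = set(neighbors.get(i, [])) | {i}
        w_sum = len(window)  # sum of binary weights (equals sum of squares)
        local_sum = sum(values[j] for j in window)
        numerator = local_sum - mean * w_sum
        denominator = s * math.sqrt((n * w_sum - w_sum ** 2) / (n - 1))
        # Guard against constant data or a window covering every region.
        scores.append(numerator / denominator if denominator else 0.0)
    return scores
```

Large positive scores mark clusters of high counts and large negative scores mark clusters of low counts; a real analysis would also need to account for multiple comparisons across counties.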

Final Report PDF | Final Report IPython Notebook

Vertical Search Engine for Tweets

Python, HTML/CSS, Javascript/jQuery, Information Retrieval

This project is a small-scale implementation of a search system. We collected every tweet made by a currently serving Congressperson, indexed them by sender, and built a simple Flask application to let a user perform searches. The Python "Whoosh" library handled indexing and searching. Because tweets are by nature very short, a simple Okapi BM25 search is inadequate to capture relevant tweets. To address this, we applied Latent Semantic Analysis over the indexed tweets to identify strongly related terms and expand the initial query, which increased precision significantly in our final product. A PDF of the final report is linked below.
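To make the short-document problem concrete, the following is a minimal pure-Python sketch of Okapi BM25 scoring with query expansion. It does not use the Whoosh API, and the `related_terms` table is hard-coded as a stand-in for what Latent Semantic Analysis would produce; both function names and the sample parameters are hypothetical.

```python
import math
from collections import Counter

def bm25_scores(query_terms, docs, k1=1.2, b=0.75):
    """Score each document against the query with Okapi BM25."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs
    # Number of documents containing each term.
    doc_freq = Counter(term for d in tokenized for term in set(d))
    scores = []
    for d in tokenized:
        counts = Counter(d)
        score = 0.0
        for term in query_terms:
            tf = counts[term]
            if tf == 0:
                continue
            # Non-negative idf variant (adds 1 inside the logarithm).
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            norm = tf + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf * (k1 + 1) / norm
        scores.append(score)
    return scores

def expand_query(query_terms, related_terms):
    """Append related terms (assumed to come from LSA) to the query."""
    expanded = list(query_terms)
    for term in query_terms:
        for extra in related_terms.get(term, []):
            if extra not in expanded:
                expanded.append(extra)
    return expanded
```

A tweet that never uses the literal query word scores zero under plain BM25, but can be retrieved once the query is expanded with related terms.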

Final Report PDF | Github Repository

Network Analysis of Reddit Posts

Python, Network Analysis, Classification

This project used comments collected from Reddit in October 2018 to see whether a basic network analysis of those comments could improve the accuracy of a bot identification classifier. After locating a labeled set of users who identified as bots, we extracted a traditional set of text-based features (the number of user comments, time posted, number of unique subreddits, etc.) and performed a classification using both a Support Vector Machine and a Random Forest Classifier. We then created a network from the same dataset, with nodes representing individual users and directed edges linking users when one commented on another's post, and extracted features from the resulting network such as Node Degree, Ego Density, Clustering Coefficient, and Triadic Census counts. Additional classification was performed on network features alone, as well as in conjunction with the traditional text features, to see whether the addition of network analysis could improve the accuracy of the classifiers. The resulting analysis indicated, on average, a 2% increase in accuracy with the addition of the network features. A PDF copy of the final report is included below.
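A minimal sketch of the network feature extraction, assuming the input is a list of (commenter, post author) pairs. It covers only degree and local clustering coefficient, computes clustering on the undirected version of the graph, and uses plain Python instead of whatever network library the project actually used; the function name and output keys are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

def network_features(edges):
    """Compute per-user degree and clustering features.

    edges: iterable of (commenter, post_author) pairs. Repeated pairs
    count toward degree each time; self-replies are ignored.
    """
    out_deg = defaultdict(int)
    in_deg = defaultdict(int)
    undirected = defaultdict(set)
    for src, dst in edges:
        if src == dst:
            continue
        out_deg[src] += 1
        in_deg[dst] += 1
        undirected[src].add(dst)
        undirected[dst].add(src)

    features = {}
    for user, nbrs in undirected.items():
        k = len(nbrs)
        # Count pairs of neighbors that are themselves connected.
        links = sum(1 for a, b in combinations(nbrs, 2) if b in undirected[a])
        clustering = 2 * links / (k * (k - 1)) if k > 1 else 0.0
        features[user] = {
            "in_degree": in_deg[user],
            "out_degree": out_deg[user],
            "clustering": clustering,
        }
    return features
```

Each user's feature dictionary could then be appended to that user's text-based features before training a classifier.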

Final Report PDF | Github Repository

Exploratory Analysis of Greenhouse Emission Data

Python, Data Visualization

This was a smaller project than most, in which we were asked to locate a data set of significant size and answer a series of self-posed questions about that data using analysis and visualizations from the Python libraries Pandas and Seaborn. My data came from the US EPA's public air quality dataset, which includes daily readings from 204 sites around the US between 2000 and 2016. The specific questions and accompanying visualizations can be found in the Final Report PDF linked below.

Final Report PDF | Final Report IPython Notebook

SI506 Final Project

Python, HTML

This project is intended as a cumulative assessment of concepts covered in SI 506 - Introduction to Python. Required components were accessing two different APIs, defining class instances, demonstrating sorting, and creating a human-readable output file. My implementation in particular uses a console input to prompt the user for a query with which to search the New York Times API. The first 10 responses from the API are displayed to the user, sorted by age in days. The user is then prompted to select an article, the keywords of which are used to search the Flickr API for pictures which may be related to the article's content. The output consists of a simple HTML page containing the selected article's title, a brief summary, and a list of photos with associated keywords.
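The class-and-sorting requirement can be sketched roughly as follows. The `Article` class, its fields, and the ascending sort order are assumptions for illustration; the real project builds its instances from New York Times API responses, which are not reproduced here.

```python
from datetime import date

class Article:
    """Hypothetical container for one search result."""

    def __init__(self, title, pub_date, keywords=None):
        self.title = title
        self.pub_date = pub_date
        self.keywords = keywords or []

    def age_in_days(self, today):
        """Number of days between publication and the given date."""
        return (today - self.pub_date).days

def newest_first(articles, today, limit=10):
    """Return up to `limit` articles, youngest first."""
    return sorted(articles, key=lambda a: a.age_in_days(today))[:limit]
```

The keywords of whichever article the user picks would then become the search terms for the photo lookup.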

Github Repository

SI507 Final Project

Python, SQL

This project is a demonstration of our understanding of concepts covered in SI 507 - Intermediate Python: APIs, web scraping, and SQL. We were offered a fair bit of leeway in our choice of project, as long as it met the basic criteria of obtaining and collating data from multiple sources and displaying it to the user. I chose to pull data on movies from multiple sources (a large set of .csv files from Kaggle, the Open Movie Database API, and Wikipedia) to create a data set that the user can query. Data from these sources was pulled using several Python scripts, utilizing the Requests and BeautifulSoup libraries, and inserted into a SQLite database. The database allows references to be made between movies, user reviews, and cast and crew data pulled from the data sources.
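A minimal sketch of how such cross-references might be modeled in SQLite, using Python's built-in `sqlite3` module. The table names, column names, and helper functions are hypothetical and are not taken from the project's repository.

```python
import sqlite3

# Hypothetical schema: reviews and credits both point back at movies.
SCHEMA = """
CREATE TABLE movies (
    id    INTEGER PRIMARY KEY,
    title TEXT NOT NULL,
    year  INTEGER
);
CREATE TABLE reviews (
    id       INTEGER PRIMARY KEY,
    movie_id INTEGER NOT NULL REFERENCES movies(id),
    rating   REAL
);
CREATE TABLE credits (
    id       INTEGER PRIMARY KEY,
    movie_id INTEGER NOT NULL REFERENCES movies(id),
    person   TEXT NOT NULL,
    role     TEXT
);
"""

def build_database(path=":memory:"):
    """Create the tables and return an open connection."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn

def average_rating(conn, title):
    """Average review rating for a movie title, or None if unreviewed."""
    row = conn.execute(
        "SELECT AVG(r.rating) FROM movies m "
        "JOIN reviews r ON r.movie_id = m.id WHERE m.title = ?",
        (title,),
    ).fetchone()
    return row[0]
```

Keeping reviews and credits in their own tables keyed by `movie_id` lets one movie carry any number of reviews or cast entries without duplicating the movie row.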

The user interface is a web application built on the Python Flask microframework, with templates and POST requests that allow a user to sort and filter data, as well as navigate to pages by movie title or cast/crew name. Data is pulled from the SQLite database using SQL queries constructed from the HTML form inputs, then displayed as a mix of HTML tables and simple graphs rendered with the plotly.js library. A detailed readme is also provided in the GitHub repository.
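One way to turn form fields into a query safely is to whitelist anything that ends up in the SQL text and bind everything else as a parameter. The sketch below assumes hypothetical form field names (`sort`, `order`, `min_year`) and a hypothetical `movies` table; it is not the project's actual query builder.

```python
# Only these column names may ever appear in ORDER BY.
ALLOWED_SORT = {"title": "title", "year": "year"}

def build_movie_query(form):
    """Build (sql, params) from a dict of submitted form values."""
    # Unknown or malicious sort values fall back to a safe default.
    sort_col = ALLOWED_SORT.get(form.get("sort", ""), "title")
    direction = "DESC" if form.get("order") == "desc" else "ASC"

    sql = "SELECT title, year FROM movies"
    params = []
    if form.get("min_year"):
        # User-supplied values are bound as parameters, never interpolated.
        sql += " WHERE year >= ?"
        params.append(int(form["min_year"]))
    sql += f" ORDER BY {sort_col} {direction}"
    return sql, params
```

The returned pair can be passed straight to a database cursor's `execute(sql, params)` call.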

Github Repository

SI539 Final Portfolio Site

HTML, CSS, SASS, Javascript/jQuery

You're looking at it. We were asked to create a multi-page personal portfolio site from scratch to demonstrate our understanding of HTML, CSS, and related languages. For my site, I put particular emphasis on SASS as an alternative to plain CSS, and on jQuery to add an element of interactivity to aspects of the site.

Github Repository

Interactive Webinar on Copyright

Webinar Presentation, Other

Working with a partner, we were asked to give a webinar-style presentation to a group of classmates using the BlueJeans interactive presentation software. The topic of the presentation was secondary; the emphasis of the project was on planning and designing an engaging presentation within a given timeframe.

Webinar Recording

TSUGI Quiz Module Analytics

PHP, Javascript/jQuery, SQL

An attempt to extend the existing quiz module from the TSUGI project to give the instructor statistics for a more detailed formative assessment. The module parses each student's quiz results as the quiz is attempted and stores them in the module's database entry. The quiz administrator can see graphical representations of the collective results to identify questions that were frequently missed, as well as a detailed look at each question to see common misconceptions students may have had.

Visuals are created using Chart.js from data pulled from the Tsugi database. Getting results required a rewrite of the way quizzes were stored in the database. The quizzes had been stored in plain-text GIFT format, but the results view requires the results of all quiz takers in serialized JSON alongside it, which required a minor change to the data model of the quiz module.
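The aggregation behind the "frequently missed" view can be sketched as below. The module itself is written in PHP; this Python sketch only illustrates the idea, and the JSON shape (an `answers` object mapping a question id to true or false) is an assumption, not the module's actual storage format.

```python
import json
from collections import Counter

def miss_rates(serialized_results):
    """Return the fraction of attempts that missed each question.

    serialized_results: iterable of JSON strings, one per quiz attempt,
    each assumed to look like {"answers": {"q1": true, "q2": false}}.
    """
    attempts = Counter()
    misses = Counter()
    for raw in serialized_results:
        result = json.loads(raw)
        for question_id, correct in result["answers"].items():
            attempts[question_id] += 1
            if not correct:
                misses[question_id] += 1
    return {q: misses[q] / attempts[q] for q in attempts}
```

The resulting per-question rates are the kind of values that could be handed to a bar chart on the instructor's results page.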

Github Repository

TSUGI Quiz Module Authoring

HTML, CSS, PHP, Javascript/jQuery

An attempt to make the user interface of the existing TSUGI quiz module more approachable. The original interface takes input in the Moodle GIFT file format as plain text, which some prospective users found either intimidating or more complicated than they wanted. At the same time, existing users indicated a preference for all-keyboard entry. As a compromise, I designed an HTML form within the existing TSUGI quiz module that allows for mouse and keyboard entry, while still letting confident users quickly tab through entry fields.

Github Repository

OpenGL in C#

C#

A hobby project for my spare time. Using C# and the OpenTK framework, I am creating a very simple 2D graphics engine in OpenGL. It is more an exercise than a real project; I enjoy the challenge of interacting with a state machine from an object-oriented language as efficiently as possible.

Github Repository

Digital Application Prototype

Interaction Design, Other

The final project from SI 582 - Introduction to Interaction Design. Throughout the semester, we developed and refined the design of a theoretical mobile application. My project was a tracking application for groceries, with the goal of reducing household waste from expiring food. Starting with storyboards and personas, we moved on to a wireframe and finally to a fully interactive prototype.